import requests
from huggingface_hub import hf_api
import pandas as pd
import matplotlib.pyplot as plt
import rich

A (very brief) intro to exploring metadata on the Hugging Face Hub

This post shows how to use the huggingface_hub library to explore metadata on the Hugging Face Hub.

The Hugging Face Hub has become the de facto place to share machine learning models and datasets. As the number of models and datasets grows, finding the right one for your needs becomes harder. There are various ways to make relevant models and datasets easier to find; one of them is associating metadata with each model and dataset. This blog post will (very briefly) begin to explore that metadata.

Often you’ll want to explore models and datasets via the Hub website, but this isn’t the only way to explore the Hub. Along the way, we’ll briefly look at how to use the huggingface_hub library to interact with the Hub programmatically.

Library imports

For this post we’ll need a few libraries. pandas, requests and matplotlib are likely old friends (or foes…). The huggingface_hub library might be new to you but will soon become a good friend too! The rich library is fantastically useful for quickly getting familiar with a library (i.e. avoiding reading all the docs!), so we’ll import that too.

%matplotlib inline
plt.style.use("ggplot")

We’ll create an instance of the HfApi class.

api = hf_api.HfApi()

We can use rich.inspect to get a better sense of what a function or class instance is all about. Let’s see what methods the api has.

rich.inspect(api, methods=True)

<class 'huggingface_hub.hf_api.HfApi'>
<huggingface_hub.hf_api.HfApi object at 0x136a2ce80>

endpoint = 'https://huggingface.co'
token = None
change_discussion_status = def change_discussion_status(repo_id: str, discussion_num: int, new_status: Literal['open', 'closed'], *, token: Optional[str] = None, comment: Optional[str] = None, repo_type: Optional[str] = None) -> huggingface_hub.community.DiscussionStatusChange: Closes or re-opens a Discussion or Pull Request.
comment_discussion = def comment_discussion(repo_id: str, discussion_num: int, comment: str, *, token: Optional[str] = None, repo_type: Optional[str] = None) -> huggingface_hub.community.DiscussionComment: Creates a new comment on the given Discussion.
create_branch = def create_branch(repo_id: str, *, branch: str, token: Optional[str] = None, repo_type: Optional[str] = None) -> None: Create a new branch from `main` on a repo on the Hub.
create_commit = def create_commit(repo_id: str, operations: Iterable[Union[huggingface_hub._commit_api.CommitOperationAdd, huggingface_hub._commit_api.CommitOperationDelete]], *, commit_message: str, commit_description: Optional[str] = None, token: Optional[str] = None, repo_type: Optional[str] = None, revision: Optional[str] = None, create_pr: Optional[bool] = None, num_threads: int = 5, parent_commit: Optional[str] = None) -> huggingface_hub.hf_api.CommitInfo: Creates a commit in the given repo, deleting & uploading files as needed.
create_discussion = def create_discussion(repo_id: str, title: str, *, token: Optional[str] = None, description: Optional[str] = None, repo_type: Optional[str] = None, pull_request: bool = False) -> huggingface_hub.community.DiscussionWithDetails: Creates a Discussion or Pull Request.
create_pull_request = def create_pull_request(repo_id: str, title: str, *, token: Optional[str] = None, description: Optional[str] = None, repo_type: Optional[str] = None) -> huggingface_hub.community.DiscussionWithDetails: Creates a Pull Request . Pull Requests created programmatically will be in `"draft"` status.
create_repo = def create_repo(repo_id: str, *, token: Optional[str] = None, private: bool = False, repo_type: Optional[str] = None, exist_ok: bool = False, space_sdk: Optional[str] = None) -> str: Create an empty repo on the HuggingFace Hub.
create_tag = def create_tag(repo_id: str, *, tag: str, tag_message: Optional[str] = None, revision: Optional[str] = None, token: Optional[str] = None, repo_type: Optional[str] = None) -> None: Tag a given commit of a repo on the Hub.
dataset_info = def dataset_info(repo_id: str, *, revision: Optional[str] = None, timeout: Optional[float] = None, files_metadata: bool = False, token: Union[bool, str, NoneType] = None) -> huggingface_hub.hf_api.DatasetInfo: Get info on one specific dataset on huggingface.co.
delete_branch = def delete_branch(repo_id: str, *, branch: str, token: Optional[str] = None, repo_type: Optional[str] = None) -> None: Delete a branch from a repo on the Hub.
delete_file = def delete_file(path_in_repo: str, repo_id: str, *, token: Optional[str] = None, repo_type: Optional[str] = None, revision: Optional[str] = None, commit_message: Optional[str] = None, commit_description: Optional[str] = None, create_pr: Optional[bool] = None, parent_commit: Optional[str] = None) -> huggingface_hub.hf_api.CommitInfo: Deletes a file in the given repo.
delete_folder = def delete_folder(path_in_repo: str, repo_id: str, *, token: Optional[str] = None, repo_type: Optional[str] = None, revision: Optional[str] = None, commit_message: Optional[str] = None, commit_description: Optional[str] = None, create_pr: Optional[bool] = None, parent_commit: Optional[str] = None) -> huggingface_hub.hf_api.CommitInfo: Deletes a folder in the given repo.
delete_repo = def delete_repo(repo_id: str, *, token: Optional[str] = None, repo_type: Optional[str] = None): Delete a repo from the HuggingFace Hub. CAUTION: this is irreversible.
delete_tag = def delete_tag(repo_id: str, *, tag: str, token: Optional[str] = None, repo_type: Optional[str] = None) -> None: Delete a tag from a repo on the Hub.
edit_discussion_comment = def edit_discussion_comment(repo_id: str, discussion_num: int, comment_id: str, new_content: str, *, token: Optional[str] = None, repo_type: Optional[str] = None) -> huggingface_hub.community.DiscussionComment: Edits a comment on a Discussion / Pull Request.
get_dataset_tags = def get_dataset_tags() -> huggingface_hub.utils.endpoint_helpers.DatasetTags: Gets all valid dataset tags as a nested namespace object.
get_discussion_details = def get_discussion_details(repo_id: str, discussion_num: int, *, repo_type: Optional[str] = None, token: Optional[str] = None) -> huggingface_hub.community.DiscussionWithDetails: Fetches a Discussion's / Pull Request 's details from the Hub.
get_full_repo_name = def get_full_repo_name(model_id: str, *, organization: Optional[str] = None, token: Union[bool, str, NoneType] = None): Returns the repository name for a given model ID and optional organization.
get_model_tags = def get_model_tags() -> huggingface_hub.utils.endpoint_helpers.ModelTags: Gets all valid model tags as a nested namespace object
get_repo_discussions = def get_repo_discussions(repo_id: str, *, repo_type: Optional[str] = None, token: Optional[str] = None) -> Iterator[huggingface_hub.community.Discussion]: Fetches Discussions and Pull Requests for the given repo.
hide_discussion_comment = def hide_discussion_comment(repo_id: str, discussion_num: int, comment_id: str, *, token: Optional[str] = None, repo_type: Optional[str] = None) -> huggingface_hub.community.DiscussionComment: Hides a comment on a Discussion / Pull Request.
list_datasets = def list_datasets(*, filter: Union[huggingface_hub.utils.endpoint_helpers.DatasetFilter, str, Iterable[str], NoneType] = None, author: Optional[str] = None, search: Optional[str] = None, sort: Union[Literal['lastModified'], str, NoneType] = None, direction: Optional[Literal[-1]] = None, limit: Optional[int] = None, cardData: Optional[bool] = None, full: Optional[bool] = None, token: Optional[str] = None) -> List[huggingface_hub.hf_api.DatasetInfo]: Get the list of all the datasets on huggingface.co
list_metrics = def list_metrics() -> List[huggingface_hub.hf_api.MetricInfo]: Get the public list of all the metrics on huggingface.co
list_models = def list_models(*, filter: Union[huggingface_hub.utils.endpoint_helpers.ModelFilter, str, Iterable[str], NoneType] = None, author: Optional[str] = None, search: Optional[str] = None, emissions_thresholds: Optional[Tuple[float, float]] = None, sort: Union[Literal['lastModified'], str, NoneType] = None, direction: Optional[Literal[-1]] = None, limit: Optional[int] = None, full: Optional[bool] = None, cardData: bool = False, fetch_config: bool = False, token: Union[bool, str, NoneType] = None) -> List[huggingface_hub.hf_api.ModelInfo]: Get the list of all the models on huggingface.co
list_repo_files = def list_repo_files(repo_id: str, *, revision: Optional[str] = None, repo_type: Optional[str] = None, timeout: Optional[float] = None, token: Union[bool, str, NoneType] = None) -> List[str]: Get the list of files in a given repo.
list_spaces = def list_spaces(*, filter: Union[str, Iterable[str], NoneType] = None, author: Optional[str] = None, search: Optional[str] = None, sort: Union[Literal['lastModified'], str, NoneType] = None, direction: Optional[Literal[-1]] = None, limit: Optional[int] = None, datasets: Union[str, Iterable[str], NoneType] = None, models: Union[str, Iterable[str], NoneType] = None, linked: bool = False, full: Optional[bool] = None, token: Optional[str] = None) -> List[huggingface_hub.hf_api.SpaceInfo]: Get the public list of all Spaces on huggingface.co
merge_pull_request = def merge_pull_request(repo_id: str, discussion_num: int, *, token: Optional[str] = None, comment: Optional[str] = None, repo_type: Optional[str] = None): Merges a Pull Request.
model_info = def model_info(repo_id: str, *, revision: Optional[str] = None, timeout: Optional[float] = None, securityStatus: Optional[bool] = None, files_metadata: bool = False, token: Union[bool, str, NoneType] = None) -> huggingface_hub.hf_api.ModelInfo: Get info on one specific model on huggingface.co
move_repo = def move_repo(from_id: str, to_id: str, *, repo_type: Optional[str] = None, token: Optional[str] = None): Moving a repository from namespace1/repo_name1 to namespace2/repo_name2
rename_discussion = def rename_discussion(repo_id: str, discussion_num: int, new_title: str, *, token: Optional[str] = None, repo_type: Optional[str] = None) -> huggingface_hub.community.DiscussionTitleChange: Renames a Discussion.
repo_info = def repo_info(repo_id: str, *, revision: Optional[str] = None, repo_type: Optional[str] = None, timeout: Optional[float] = None, files_metadata: bool = False, token: Union[bool, str, NoneType] = None) -> Union[huggingface_hub.hf_api.ModelInfo, huggingface_hub.hf_api.DatasetInfo, huggingface_hub.hf_api.SpaceInfo]: Get the info object for a given repo of a given type.
set_access_token = def set_access_token(access_token: str): Saves the passed access token so git can correctly authenticate the user.
space_info = def space_info(repo_id: str, *, revision: Optional[str] = None, timeout: Optional[float] = None, files_metadata: bool = False, token: Union[bool, str, NoneType] = None) -> huggingface_hub.hf_api.SpaceInfo: Get info on one specific Space on huggingface.co.
unset_access_token = def unset_access_token(): Resets the user's access token.
update_repo_visibility = def update_repo_visibility(repo_id: str, private: bool = False, *, token: Optional[str] = None, organization: Optional[str] = None, repo_type: Optional[str] = None, name: Optional[str] = None) -> Dict[str, bool]: Update the visibility setting of a repository.
upload_file = def upload_file(*, path_or_fileobj: Union[str, bytes, BinaryIO], path_in_repo: str, repo_id: str, token: Optional[str] = None, repo_type: Optional[str] = None, revision: Optional[str] = None, commit_message: Optional[str] = None, commit_description: Optional[str] = None, create_pr: Optional[bool] = None, parent_commit: Optional[str] = None) -> str: Upload a local file (up to 50 GB) to the given repo. The upload is done through a HTTP post request, and doesn't require git or git-lfs to be installed.
upload_folder = def upload_folder(*, repo_id: str, folder_path: Union[str, pathlib.Path], path_in_repo: Optional[str] = None, commit_message: Optional[str] = None, commit_description: Optional[str] = None, token: Optional[str] = None, repo_type: Optional[str] = None, revision: Optional[str] = None, create_pr: Optional[bool] = None, parent_commit: Optional[str] = None, allow_patterns: Union[List[str], str, NoneType] = None, ignore_patterns: Union[List[str], str, NoneType] = None): Upload a local folder to the given repo. The upload is done through a HTTP requests, and doesn't require git or git-lfs to be installed.
whoami = def whoami(token: Optional[str] = None) -> Dict: Call HF API to know "whoami".

Looking through this, you’ll see there are a bunch of different things we can now do programmatically via the Hub. For this post we’re interested in the list_datasets and list_models methods. If we look at one of these, we can see it has a bunch of different options we can use when listing datasets or models.

rich.inspect(api.list_models)

<bound method HfApi.list_models of <huggingface_hub.hf_api.HfApi object at 0x136a2ce80>>

def HfApi.list_models(*, filter: Union[huggingface_hub.utils.endpoint_helpers.ModelFilter, str, Iterable[str], NoneType] = None, author: Optional[str] = None, search: Optional[str] = None, emissions_thresholds: Optional[Tuple[float, float]] = None, sort: Union[Literal['lastModified'], str, NoneType] = None, direction: Optional[Literal[-1]] = None, limit: Optional[int] = None, full: Optional[bool] = None, cardData: bool = False, fetch_config: bool = False, token: Union[bool, str, NoneType] = None) -> List[huggingface_hub.hf_api.ModelInfo]:

Get the list of all the models on huggingface.co

28 attribute(s) not shown. Run inspect(inspect) for options.
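The signature printed above starts with a bare `*`, which means every argument has to be passed by keyword. If you want the same information as data instead of a printed panel, the standard library’s inspect module can read it off a function. The sketch below is only illustrative: list_items is a hypothetical stand-in that copies three of the parameters shown above, so it runs offline and is not part of huggingface_hub.

```python
import inspect
from typing import Optional


def list_items(
    *,
    filter: Optional[str] = None,
    limit: Optional[int] = None,
    full: Optional[bool] = None,
):
    """Hypothetical stand-in mirroring a few keyword-only parameters."""
    return []


signature = inspect.signature(list_items)

# Map each parameter name to its default value.
defaults = {name: param.default for name, param in signature.parameters.items()}

# Parameters declared after the bare `*` are keyword-only.
keyword_only = [
    name
    for name, param in signature.parameters.items()
    if param.kind is inspect.Parameter.KEYWORD_ONLY
]

print(defaults)
print(keyword_only)
```

Passing the real api.list_models to inspect.signature should work the same way, although the exact parameters depend on the installed version of huggingface_hub.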

For our use case we want everything, so we set limit=None. We don’t want any filters, so we set filter=None. (Both are the default behaviour, but we set them explicitly to make things clearer for our future selves.) We also set full=True so we get back more verbose information about our datasets and models. Finally, we wrap the result in iter and list, since the behaviour of these methods will change in future versions to support paging.
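To see why the list(iter(...)) wrapping is harmless today and still works once paging arrives, here is a small offline sketch. fetch_as_list and fetch_as_generator are hypothetical stand-ins for a method that returns a plain list now and a lazily paged iterator later; neither is part of huggingface_hub, and the item names are made up.

```python
from typing import Iterator, List


def fetch_as_list() -> List[str]:
    """Stand-in for a method that returns everything as a list."""
    return ["model-a", "model-b", "model-c"]


def fetch_as_generator() -> Iterator[str]:
    """Stand-in for a method that yields items page by page."""
    for page in (["model-a", "model-b"], ["model-c"]):
        yield from page


# The same wrapping works for both return types and always produces a list.
from_list = list(iter(fetch_as_list()))
from_generator = list(iter(fetch_as_generator()))

print(from_list == from_generator)
```

Either way we end up with an ordinary list that we can index and measure with len.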

hub_datasets = list(iter(api.list_datasets(limit=None, filter=None, full=True)))
hub_models = list(iter(api.list_models(limit=None, filter=None, full=True)))

Let’s peek at an example of what we get back.

hub_models[0]

ModelInfo: {

modelId: albert-base-v1

sha: aeffd769076a5c4f83b2546aea99ca45a15a5da4

lastModified: 2021-01-13T15:08:24.000Z

tags: ['pytorch', 'tf', 'albert', 'fill-mask', 'en', 'dataset:bookcorpus', 'dataset:wikipedia', 'arxiv:1909.11942', 'transformers', 'exbert', 'license:apache-2.0', 'autotrain_compatible', 'has_space']

pipeline_tag: fill-mask

siblings: [RepoFile(rfilename='.gitattributes', size='None', blob_id='None', lfs='None'), RepoFile(rfilename='README.md', size='None', blob_id='None', lfs='None'), RepoFile(rfilename='config.json', size='None', blob_id='None', lfs='None'), RepoFile(rfilename='pytorch_model.bin', size='None', blob_id='None', lfs='None'), RepoFile(rfilename='spiece.model', size='None', blob_id='None', lfs='None'), RepoFile(rfilename='tf_model.h5', size='None', blob_id='None', lfs='None'), RepoFile(rfilename='tokenizer.json', size='None', blob_id='None', lfs='None'), RepoFile(rfilename='with-prefix-tf_model.h5', size='None', blob_id='None', lfs='None')]

private: False

author: None

config: None

securityStatus: None

_id: 621ffdc036468d709f174328

id: albert-base-v1

gitalyUid: 4f35551ea371da7a8762caab54319a54ade836044f0ca7690d21e86b159867eb

likes: 1

downloads: 75182

library_name: transformers

}

hub_datasets[0]

DatasetInfo: {

id: acronym_identification

sha: 173af1516c409eb4596bc63a69626bdb5584c40c

lastModified: 2022-11-18T17:25:49.000Z

tags: ['task_categories:token-classification', 'annotations_creators:expert-generated', 'language_creators:found', 'multilinguality:monolingual', 'size_categories:10K<n<100K', 'source_datasets:original', 'language:en', 'license:mit', 'acronym-identification', 'arxiv:2010.14678']

private: False

author: None

description: Acronym identification training and development sets for the acronym identification task at SDU@AAAI-21.

citation: @inproceedings{veyseh-et-al-2020-what,

title={{What Does This Acronym Mean? Introducing a New Dataset for Acronym Identification and Disambiguation}},

author={Amir Pouran Ben Veyseh and Franck Dernoncourt and Quan Hung Tran and Thien Huu Nguyen},

year={2020},

booktitle={Proceedings of COLING},

link={https://arxiv.org/pdf/2010.14678v1.pdf}

}

cardData: {'annotations_creators': ['expert-generated'], 'language_creators': ['found'], 'language': ['en'], 'license': ['mit'], 'multilinguality': ['monolingual'], 'size_categories': ['10K<n<100K'], 'source_datasets': ['original'], 'task_categories': ['token-classification'], 'task_ids': [], 'paperswithcode_id': 'acronym-identification', 'pretty_name': 'Acronym Identification Dataset', 'train-eval-index': [{'config': 'default', 'task': 'token-classification', 'task_id': 'entity_extraction', 'splits': {'eval_split': 'test'}, 'col_mapping': {'tokens': 'tokens', 'labels': 'tags'}}], 'tags': ['acronym-identification'], 'dataset_info': {'features': [{'name': 'id', 'dtype': 'string'}, {'name': 'tokens', 'sequence': 'string'}, {'name': 'labels', 'sequence': {'class_label': {'names': {'0': 'B-long', '1': 'B-short', '2': 'I-long', '3': 'I-short', '4': 'O'}}}}], 'splits': [{'name': 'train', 'num_bytes': 7792803, 'num_examples': 14006}, {'name': 'validation', 'num_bytes': 952705, 'num_examples': 1717}, {'name': 'test', 'num_bytes': 987728, 'num_examples': 1750}], 'download_size': 8556464, 'dataset_size': 9733236}}

siblings: []

_id: 621ffdd236468d709f181d58

disabled: False

gated: False

gitalyUid: 6570517623fa521aa189178e7c7e73d9d88c01b295204edef97f389a15eae144

likes: 9

downloads: 6074

paperswithcode_id: acronym-identification

}

Since we want both models and datasets, we create a dictionary keyed by the type of item, i.e. whether it is a dataset or a model.

hub_data = {"model": hub_models, "dataset": hub_datasets}

We’ll be putting our data inside a pandas DataFrame, so we’ll grab the .__dict__ attribute of each hub item to make it more pandas-friendly.

hub_item_dict = []
for hub_type, hub_item in hub_data.items():
    for item in hub_item:
        # __dict__ is the item's own attribute dict, so adding "type"
        # here also adds it to the original object.
        data = item.__dict__
        data["type"] = hub_type
        hub_item_dict.append(data)

df = pd.DataFrame.from_dict(hub_item_dict)

How many hub items do we have?

len(df)

151343

What info do we have?

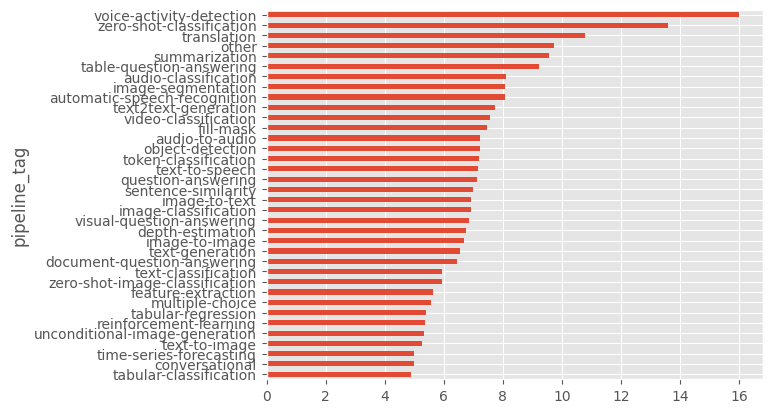

df.columns

Index(['modelId', 'sha', 'lastModified', 'tags', 'pipeline_tag', 'siblings',
       'private', 'author', 'config', 'securityStatus', '_id', 'id',
       'gitalyUid', 'likes', 'downloads', 'library_name', 'type',
       'description', 'citation', 'cardData', 'disabled', 'gated',
       'paperswithcode_id'],
      dtype='object')
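Much of the metadata we care about lives in the tags column. In the example records shown earlier, some tags look like prefix:value pairs (for example license:apache-2.0 or dataset:wikipedia) while others are bare strings. As a first step towards exploring them, here is a minimal sketch that separates the two kinds, using the tag list from the albert-base-v1 record. split_tags is a hypothetical helper, and treating everything before the first colon as a key is an assumption based on these examples, not a documented rule of the Hub.

```python
from collections import defaultdict

# Tags copied from the albert-base-v1 record shown earlier.
tags = [
    "pytorch", "tf", "albert", "fill-mask", "en",
    "dataset:bookcorpus", "dataset:wikipedia", "arxiv:1909.11942",
    "transformers", "exbert", "license:apache-2.0",
    "autotrain_compatible", "has_space",
]


def split_tags(tags):
    """Split tags into {prefix: [values]} and a list of bare tags.

    Assumption: the text before the first ':' is treated as the prefix.
    """
    prefixed = defaultdict(list)
    plain = []
    for tag in tags:
        prefix, sep, value = tag.partition(":")
        if sep:
            prefixed[prefix].append(value)
        else:
            plain.append(tag)
    return dict(prefixed), plain


prefixed, plain = split_tags(tags)
print(prefixed)
print(plain)
```

Applied to the DataFrame, something like df["tags"].apply(split_tags) would give the same split for every row, though rows where tags is missing would need handling first.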